Multidimensional integrator

This demo implements an N-dimensional neural integrator.

This example uses a recurrent network to show how neurons can implement stable dynamics. Such dynamics are important for memory, noise cleanup, statistical inference, and many other dynamic transformations.

It employs the EnsembleArray network, which provides a convenient method to act on multiple low-dimensional ensembles as though they were one high-dimensional ensemble.

[1]:

import matplotlib.pyplot as plt
%matplotlib inline
import numpy as np

import nengo
from nengo.networks import EnsembleArray
from nengo.processes import Piecewise

import nengo_loihi
nengo_loihi.set_defaults()

Creating the network in Nengo

Our model consists of one recurrently connected ensemble array and one input node per dimension. Each input node provides a piecewise step function so that we can see the effects of recurrence.
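To make the shape of a piecewise step input concrete, the sketch below evaluates a dictionary of breakpoints in plain Python. The helper `piecewise_value` is hypothetical and only mimics the hold-until-next-breakpoint behaviour; it is not how `nengo.processes.Piecewise` is implemented. The fixed amplitudes are assumptions standing in for the random draws used in the model.

```python
import bisect


def piecewise_value(breakpoints, t):
    """Return the value of the latest breakpoint at or before time t.

    Hypothetical helper for illustration only. Times before the first
    breakpoint are assumed to map to 0.
    """
    times = sorted(breakpoints)
    idx = bisect.bisect_right(times, t) - 1
    return 0.0 if idx < 0 else breakpoints[times[idx]]


# Fixed amplitudes stand in for the np.random.uniform draws in the model.
steps = {0: 0, 0.2: 1.5, 1: 0, 2: -2.0, 3: 0, 4: 0.7, 5: 0}

for t in (0.1, 0.5, 1.5, 2.5, 4.5, 5.5):
    print(t, piecewise_value(steps, t))
```

Each nonzero step is followed by a return to zero, so an integrator driven by this input should ramp during the step and then hold its value.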

[2]:

dimensions = 3
tau = 0.1

with nengo.Network(label='Integrator') as model:
    ens = EnsembleArray(n_neurons=100, n_ensembles=dimensions)

    # One piecewise step input per dimension
    stims = [
        nengo.Node(
            Piecewise({
                0: 0,
                0.2: np.random.uniform(low=0.5, high=3.),
                1: 0,
                2: np.random.uniform(low=-3, high=-0.5),
                3: 0,
                4: np.random.uniform(low=-3, high=3),
                5: 0
            })
        )
        for dim in range(dimensions)
    ]
    for i, stim in enumerate(stims):
        nengo.Connection(stim, ens.input[i], transform=[[tau]], synapse=tau)

    # Connect the ensemble array to itself
    nengo.Connection(ens.output, ens.input, synapse=tau)

    # Collect data for plotting
    stim_probes = [nengo.Probe(stim) for stim in stims]
    ens_probe = nengo.Probe(ens.output, synapse=0.01)
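The input connection is scaled by `tau` while the recurrent connection is left as the identity, and the reason can be checked without any neurons. Assuming the synapse behaves as an ideal first-order lowpass filter, the state obeys `tau * dx/dt = -x + (x + tau * u)`, which simplifies to `dx/dt = u`. The sketch below steps that equation with forward Euler; `simulate_integrator` is a hypothetical helper, and the idealization ignores spiking noise and decoding error.

```python
def simulate_integrator(inputs, tau=0.1, dt=0.001):
    """Forward-Euler simulation of one idealized integrator dimension.

    The state x is the output of a first-order lowpass filter (time
    constant tau) driven by the recurrent feedback x plus the external
    input u scaled by tau.
    """
    x = 0.0
    trace = []
    for u in inputs:
        filter_input = x + tau * u           # recurrent term + scaled input
        x += dt * (filter_input - x) / tau   # lowpass update, equals dt * u
        trace.append(x)
    return trace


# A constant input of 1.0 for one second, then zero input for one second.
ramp_then_hold = [1.0] * 1000 + [0.0] * 1000
trace = simulate_integrator(ramp_then_hold, tau=0.1, dt=0.001)
print(trace[999], trace[-1])
```

In this idealized model the state ramps while the input is on and stays constant once the input returns to zero, which is the memory behaviour the neural version approximates.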

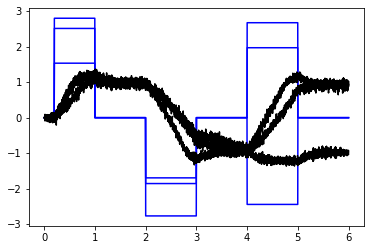

Running the network in Nengo

Running the model with the reference Nengo simulator shows the desired output.

[3]:

with nengo.Simulator(model) as sim:
    sim.run(6)
t = sim.trange()

[4]:

def plot_decoded(t, data):
    plt.figure()
    # Input step functions in blue
    for stim_probe in stim_probes:
        plt.plot(t, data[stim_probe], color="b")
    # Decoded ensemble array output in black
    plt.plot(t, data[ens_probe], 'k')

plot_decoded(t, sim.data)

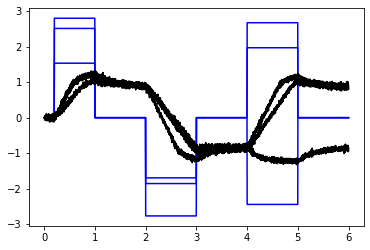

Running the network with Nengo Loihi

[5]:

with nengo_loihi.Simulator(model) as sim:
    sim.run(6)
t = sim.trange()

[6]:

plot_decoded(t, sim.data)